Asking whether AI models running on digital hardware, such as large language models, are conscious is silly. Not because we can't build artificial consciousness, or because the brain is magical and the only piece of machinery that can be conscious, but because the question shoehorns in the idea that consciousness is merely representational, abstract, or a by-product that magically appears regardless of a system’s architecture.

It's odd to assume large language models like GPT-N have consciousness just because they process some abstractions coherently. They lack any physical structure for processing consciousness and the required input/output or architecture to support it. There is literally no circuitry built to spawn affect, feelings, sentience or anything we know nature evolved in brains and nervous systems. Without looking at the layers of organization, that is to say, at what's actually being processed and how, assuming that LLMs are or might be conscious “just because” quickly amounts to scientific illiteracy.

While it could be the case that consciousness is just representational, and while consciousness is a nebulous suitcase term, as Marvin Minsky said, the idea that consciousness can live purely as a representation is quasi-magical and not in line with the scientific evidence that consciousness was selected for as a physical mechanism and is realized physically, not merely as an abstraction. The mind deals with abstractions, but can it exist entirely as an abstraction itself? That’s quite the leap to make.

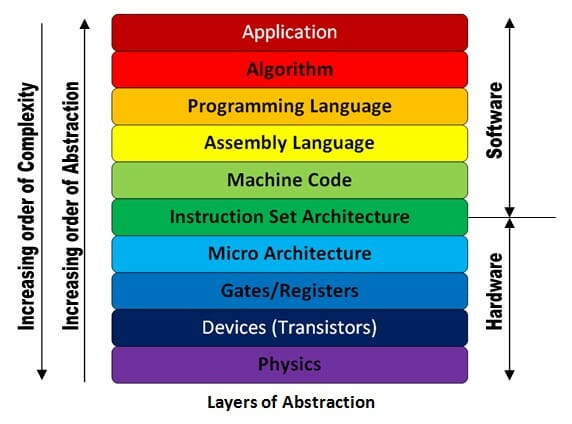

Flipping 0s and 1s, with several abstraction layers built on top just to present representations to the end user, is unlikely to magically spawn consciousness, as the evidence indicates consciousness requires a few (positive) feedback loops with supporting physical mechanisms, signaling and architecture.

You need to explicitly build it or use architectural paradigms that allow for it to emerge. If we were to think about it as engineers instead of poorly philosophizing about whether the latest off-policy actor-critic deep RL algorithm running on digital hardware happens to be conscious, we’d have a starting point to discuss. As far as I’m concerned, panpsychism is a non-starter.

Von Neumann architecture is built around the idea of abstracting away the hardware and everything underlying it, so that universal circuitry does our bidding. That's the whole point of it. Digital computing is incredibly useful and powerful precisely because it ignores the hardware so that we can build abstractions on top of that. But as far as we know, consciousness is implemented at the hardware level. From the many documented cases that amount to an affliction of consciousness, whether due to brain damage or structural changes, the use of drugs like LSD, research on how anesthesia gradually affects consciousness, or the God Helmet, it’s clear enough that consciousness operates on physics and is processed across layers of organization over its substrate, down to the molecular level.

So if you've constrained your hardware to do your bidding without the ability to enlist it in the processes like in the brain, you've likely already lost before you got started.

Hardware is not software.

Too many seem to be too quick to assume the mind is “simply” software. Software is just a representational concept that builds upon constrained state changes on a substrate. Thanks to the engineering paradigms of the past century, which have given us abstraction layers from the bottom all the way to the top, it has very little to do with what the brain is and how it functions. The brain is both the hardware and the software in one. Our current engineering paradigms don’t translate to how the brain functions, and the brain is the only implementation of consciousness we know. Again, this is not because only brains or organic material can be conscious, or because all implementations of consciousness must fully mimic the brain. It is because the brain is the only piece of hardware we know actually implements it. While we have a mountain of evidence for the neural correlates of consciousness and lots of indirect evidence for how it’s physically processed, we have exactly zero evidence for the idea that we can turn this physical, analog computing device into an abstraction and call it conscious.

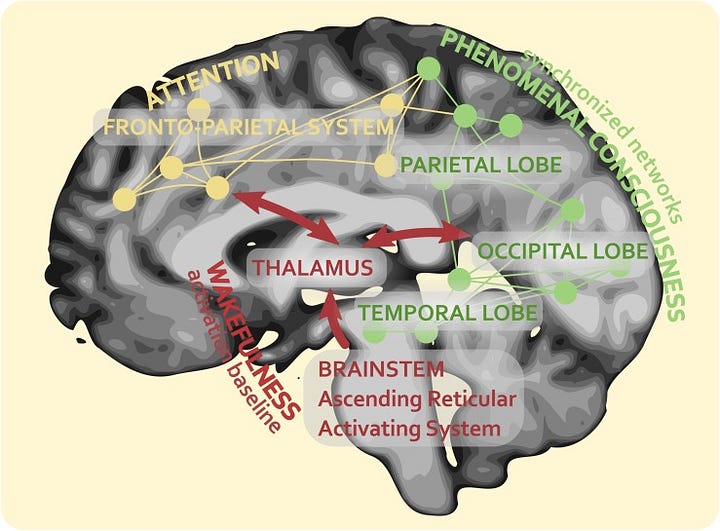

As this elaborate paper on the neural correlates of consciousness describes, consciousness and attention are distinct and separate processes in the brain that are closely related. Consciousness is responsible for creating a coherent picture of reality, while attention focuses on relevant objects of thought. There are two dimensions of consciousness, wakefulness and content, and two types of consciousness: phenomenal (how the world appears) and access (when contents are available for focus). The neural correlates of consciousness are generated by the activity of sensory and associative networks in the temporal, occipital, and parietal cortices. The neural correlates of attention are generated by a fronto-parietal system with two main networks: the dorsal attention network (DAN) and the ventral attention network (VAN). These two networks partially overlap in the parietal association networks. While distinct and separate, consciousness and attention serve each other: attention helps consciousness acquire cognitive relevance, and consciousness helps attention provide focal awareness and enrich our experience.

And so, my suggestion is to apply those principles as we attempt to replicate it, and not to randomly assume that the vague idea of “information processing” does the job. Gualtiero Piccinini does an excellent job of laying out how sloppily terms like information processing/cognition/computation are used in (cognitive) science:

Computation and information processing are among the most fundamental notions in cognitive science. They are also among the most imprecisely discussed. Many cognitive scientists take it for granted that cognition involves computation, information processing, or both – although others disagree vehemently. Yet different cognitive scientists use ‘computation’ and ‘information processing’ to mean different things, sometimes without realizing that they do. In addition, computation and information processing are surrounded by several myths; first and foremost, that they are the same thing.

It’s hard to understand why many just assume consciousness is software or shrug at considering the actual physical implementation of a mind. Perhaps it hasn't sunk in with some people that the brain is effectively in a vat?

Being surrounded by cerebrospinal fluid helps the brain float inside the skull, like a buoy in water. Because the brain is surrounded by fluid, it floats like it weighs only 2% of what it really does. If the brain did not have CSF to float in, it would sit on the bottom of the skull. It effectively sits in a sensory deprivation tank so it can process all other senses while (ironically) being "deprived" of senses itself, which is why you can perform open brain surgery on a person who is wide awake.

Surgeons can just cut into your brain and you will feel nothing, except that the cutting can impede function and for this very reason is done with people in a wakeful state, so they can check whether they can still speak, for example.

Perhaps this seduces people to think of their mind as something floating in processing land and so anything that processes information will do and they can just be replicated that way. It makes the brain less “tangible” than the rest of our body. But I stress it’s a physical processing device, even if you can’t feel it the way you feel your body.

Those who hold the view that a random digital system can be conscious will sometimes violently object to dualistic notions of consciousness and various other concepts they consider magical, yet they are themselves completely lost in abstraction and subscribe to magical ideas, since they think the mind exists somewhere in "information space" and that consciousness magically appears regardless of processes and architecture.

The onus is on the claimant who thinks this, as this is not in line with any evidence we have at this moment for the only implementation of consciousness we know of.

Integrated Information Theory is one of the approaches to classifying consciousness that acknowledges there must at least be constraints on architecture supporting consciousness:

Integrated Information Theory (IIT) offers an explanation for the nature and source of consciousness. Initially proposed by Giulio Tononi in 2004, it claims that consciousness is identical to a certain kind of information, the realization of which requires physical, not merely functional, integration, and which can be measured mathematically according to the phi metric.

See also their 2016 opinion article:

We begin by providing a summary of the axioms and corresponding postulates of IIT and show how they can be used, in principle, to identify the physical substrate of consciousness (PSC). We then discuss how IIT explains in a parsimonious manner a variety of facts about the relationship between consciousness and the brain, leads to testable predictions, and allows inferences and extrapolations about consciousness. The axioms of IIT state that every experience exists intrinsically and is structured, specific, unitary and definite. IIT then postulates that, for each essential property of experience, there must be a corresponding causal property of the PSC. The postulates of IIT state that the PSC must have intrinsic cause–effect power; its parts must also have cause–effect power within the PSC and they must specify a cause–effect structure that is specific, unitary and definite.

Now, I am not convinced about IIT as there are various issues with it that I won’t go into here, but as a pursuit to formalize and come up with ways of measuring and concretizing consciousness, the effort is to be applauded. Specifically, insisting we look at the causal relations of substrate and the actual physical processing is excellent.

One of the best ways to tease out one’s assumptions regarding this issue is to consider a human brain functionally running on a YottaFLOPS (lots of computational power) laptop and start figuring out how this would be problematic for that mind versus what it means to be an embodied physical brain. Some won't even blink if you suggest the mind running on a wooden abacus, or various other arbitrary devices, materials or the China Brain, just because they satisfy theoretical models of computation. But there really is a difference between a description and the described, or a simulated dynamical model and a physical instantiation of it. And to figure out this conundrum, one has to carefully analyze these differences. Is a digital brain equivalent to the physical version of it? How does it causally relate to the world?

“Digital computers can simulate consciousness, but the simulation has no causal power and is not actually conscious,” Koch said. It’s like simulating gravity in a video game: You don’t actually produce gravity that way.
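
To make the video game analogy concrete, here is a minimal sketch of what "simulating gravity" usually amounts to. The function name `simulate_fall`, the time step and the simple Euler-style update are my own illustrative choices, not anything taken from Koch. All the code does is update a few numbers in memory; nothing in or around the machine is pulled toward anything.

```python
G = 9.81  # m/s^2, standard gravity near Earth's surface


def simulate_fall(height, dt=0.01):
    """Step a simulated object downward until it reaches the ground.

    Returns the elapsed *simulated* time in seconds. The only thing that
    changes is the value of three variables; no force is exerted anywhere.
    """
    velocity = 0.0
    elapsed = 0.0
    while height > 0.0:
        velocity += G * dt        # "gravity" is just an addition
        height -= velocity * dt   # "falling" is just a subtraction
        elapsed += dt
    return elapsed


# Dropping a simulated object from 4.905 m takes roughly one simulated second.
fall_time = simulate_fall(4.905)
```

The description can be made as accurate as we like by shrinking `dt`, and it still has none of the causal powers of the thing described.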

Anyone who considers a digitally running mind equivalent to the brain should think hard about how this is nothing like a physical brain in a body, what's missing, and how it's different. Simulated fire does not burn. In fact, nothing we simulate has the same causal relations to the world as its physical equivalent. Sometimes this doesn’t matter. If we only care to encode information and then just reproduce it, like with sound, it may not matter. But does this apply to the entire brain and body? We have to really step out of Michio Kaku-level fantasies about mind uploading and look at the science: neuroscience, biophysics, physics. What does the evidence tell us?

Some insist we can never know and should only look at the behavior. The problem with that is that we're very easily fooled. Look at the Turing Test, for example. For over a decade I’ve often said the Turing Test is a test of natural gullibility, not artificial intelligence, because it’s designed to fool an observer rather than really test capability and underlying mechanisms.

In fact, I think the Turing Test belongs to the class of tests one thinks of before one has created that which one would like to test, before one knows how to. “Imitation game” is right, and imitation will be lethal in our future, as it'll be very easy to imitate entities that act the same as us or in a similar fashion, but have a very different internal constitution and capabilities!

This is why psychopaths can be wildly successful in society, even though they represent a tiny subset of generally intelligent entities deviating from human neurotypicals.

There are many vectors of attack here, to put it in adversarial terms. We have countless biases, constrained senses, lacking senses, and processing deficiencies that are easy to exploit in general and will be fantastically easy to exploit for AGIs.

Something like a Turing Test, or the way we evaluate behavior and make assumptions, is like shaking a container to see if there’s water in it, just by listening to whether it sloshes. It could very well be 100 other types of fluid; sloshing will perhaps tell the human ear something about its viscosity, for example. But it’s not actually a proper check of the content, is it? This sort of indirect, by-proxy testing will not do, and it’s dangerous to adopt these sorts of approaches as sufficient.

What this amounts to is that I think we have to come up with proper tests for agency, sentience and intelligence. Some of these future tests may still depend to varying degrees on proxies, but much better ones, much less coarse-grained than something like the Turing Test, which amounts to "species X is fooled by it!". We have to face that, very likely, various things that can be produced by cognitive systems like our brains can also be produced by very different cognitive systems and by non-cognitive systems. Coherent language, for example.

We are too easily fooled, it’s too easy to produce similar behavior with wildly different principles behind it, and whether a system is conscious and sentient will be ethically very relevant. We too easily anthropomorphize our pets, assign agency to inanimate objects and project intent onto systems. Looking purely at behavior is never going to fly as a way to assess the internal constitution of a system, its moral status, its personhood, or what it really does and how it processes its input to generate the output.

So, in conclusion, the issue is not that only a brain, organic matter or carbon can support consciousness. It’s that all evidence points to consciousness being physically realized in a manner that does not translate to software sitting in a stack of abstraction layers.

It’s true that in philosophy there are a lot of things to dive into w.r.t. consciousness, and I could meet each paragraph of this post with a hefty philosophical objection myself. There would be no end to the objections. Is reality even real? Are we living in a simulation?

Perhaps unsurprisingly, the most exotic ideas may not be crucial considerations right now. Not if we just want to focus on how to build consciousness and move forward on the evidence. In fact, in my opinion the evidence is such that we can remain agnostic on many philosophical issues while focusing on how to replicate based on what we know.

I think it turns out you can get very far just by looking at what we do know and when you synthesize all of that you start to paint a pretty clear picture - bit by bit. So what more do we know about consciousness and what does painting that picture look like? That’s for a future post.

These kinds of arguments are never clear about exactly which features of the physical implementation are necessary for consciousness. It's true that the appropriate physical architecture is required for certain functions, but this is also true when just considering information processing. For example, a feed-forward computation has different information dynamics than a computation with feedback loops, and such dynamics require support from the physical implementation. But given some abstract properties that support feedback dynamics, the specifics of the physical implementation are irrelevant.
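
A minimal sketch of that contrast, in Python. The function names and the 0.5 weights are made up purely for illustration. The feed-forward version maps each input to an output with no memory, while the feedback version routes its previous output back in, so a single input pulse keeps echoing through later steps.

```python
def feed_forward(inputs, weight=0.5):
    """Each output depends only on the current input (no internal state)."""
    return [weight * x for x in inputs]


def with_feedback(inputs, weight=0.5, feedback=0.5):
    """Each output also depends on the previous output, fed back as state."""
    outputs = []
    state = 0.0
    for x in inputs:
        state = weight * x + feedback * state  # the loop: old output re-enters
        outputs.append(state)
    return outputs


pulse = [1.0, 0.0, 0.0, 0.0]  # one input spike, then silence
ff = feed_forward(pulse)      # [0.5, 0.0, 0.0, 0.0]
fb = with_feedback(pulse)     # [0.5, 0.25, 0.125, 0.0625]
```

The dynamics differ because of the loop, not because of what the loop is made of: any implementation that supports carrying `state` from one step to the next yields the same decaying echo.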

If your argument is just that physical implementation matters in virtue of its functional/information dynamics, then I agree. But then the emphasis on the physical realizer in opposition to abstract properties is misplaced. On the other hand, if the argument is that certain metaphysical properties of the substrate are necessary, then the onus is on you to identify exactly the right property. But trading on a supposed distinction between abstract properties of computation and physical properties of the implementation is a mistake. A physical system computes some function in virtue of its abstract properties. These abstract properties include the temporal relationship between said properties, i.e. the causal structure intrinsic to the abstract formal system being implemented. But highly abstracted computational systems implement this causal relationship just the same as a physical implementation with no layers of abstraction in between.

Neuroscience provides important evidence for how the form of brains relates to the function of minds. But the issue is which features of the brain explain its ability to generate the function of mind. Explanations in terms of computational dynamics have borne significant fruit. But computational dynamics are not dependent on substrate, except insofar as the substrate admits certain information dynamics within the space of supported behaviors. If one is to suppose that the physical substrate is essential, one must explain exactly how.

The argument here challenges the tendency to assume that AI models like large language models (LLMs) have consciousness simply due to their advanced processing capabilities. This perspective highlights that consciousness in humans arises from intricate physical processes, deeply rooted in neural structures and complex feedback loops that are absent in digital hardware. The assumption that LLMs might be conscious ignores the physical substrates required for consciousness—like a brain’s dynamic, interconnected architecture capable of generating awareness, feelings, and experiences.

The author argues that digital computation, designed for abstraction and functionality, lacks the foundational structure for consciousness. Just as simulating gravity doesn’t create real gravitational force, simulating consciousness doesn’t equate to real awareness. This viewpoint emphasizes the need for engineering approaches that consider the physical foundations of consciousness, rather than abstract software simulations. It’s a call for cautious optimism grounded in scientific rigor, rather than philosophical speculation or anthropomorphic projections onto technology.
